On March 27, 2024, the U.S. Cybersecurity and Infrastructure Security Agency’s (“CISA”) Notice of Proposed Rulemaking (“Proposed Rule”) related to the Cyber Incident Reporting for Critical Infrastructure Act of 2022 (“CIRCIA”) was released on the Federal Register website. The Proposed Rule, which will be formally published in the Federal Register on April 4, 2024, proposes draft regulations to implement the incident reporting requirements for critical infrastructure entities from CIRCIA, which President Biden signed into law in March 2022. CIRCIA established two cyber incident reporting requirements for covered critical infrastructure entities: a 24-hour requirement to report ransomware payments and a 72-hour requirement to report covered cyber incidents to CISA. While the overarching requirements and structure of the reporting process were established under the law, CIRCIA also directed CISA to issue the Proposed Rule within 24 months of the law’s enactment to provide further detail on the scope and implementation of these requirements. Under CIRCIA, the final rule must be published by September 2025.

The Proposed Rule addresses various elements of CIRCIA, which will be covered in a forthcoming Client Alert. This blog post focuses primarily on the proposed definitions of two pivotal terms that were left to further rulemaking under CIRCIA (Covered Entity and Covered Cyber Incident), which illustrate the broad scope of CIRCIA’s reporting requirements, as well as certain proposed exceptions to the reporting requirements. The Proposed Rule will be subject to a review and comment period for 60 days after publication in the Federal Register.

Covered Entities

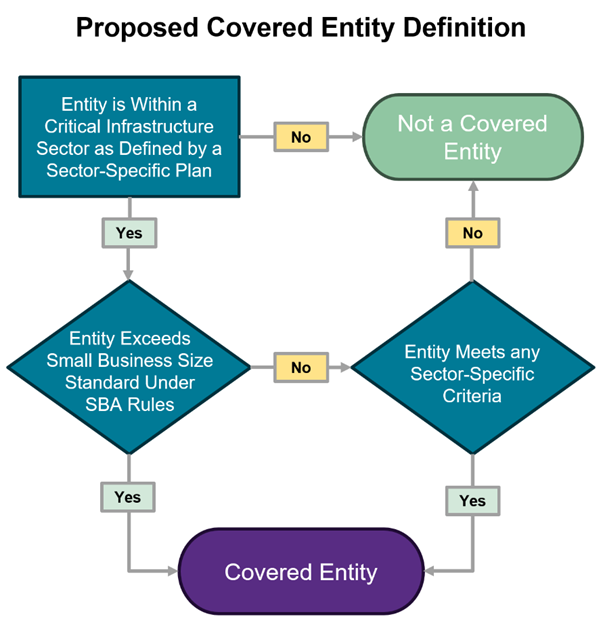

CIRCIA broadly defined “Covered Entity” to include entities that are in one of the 16 critical infrastructure sectors established under Presidential Policy Directive 21 (“PPD-21”) and directed CISA to develop a more comprehensive definition in subsequent rulemaking. Accordingly, the Proposed Rule (1) addresses how to determine whether an entity is “in” one of the 16 sectors and (2) proposes two additional criteria for the Covered Entity definition, at least one of which must be met for an entity to be covered. Notably, the Proposed Rule’s definition of Covered Entity would encompass the entire corporate entity, even if only a constituent part of its business or operations meets the criteria. Thus, Covered Cyber Incidents experienced by a Covered Entity would be reportable regardless of which part of the organization suffered the impact. In total, CISA estimates that over 300,000 entities would be covered by the Proposed Rule.
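For readers who find it easier to follow the logic as code, the structure of the proposed Covered Entity test described above can be sketched roughly as follows. This is an illustrative simplification only: the class, field, and function names are hypothetical, and `meets_criterion_a` and `meets_criterion_b` are placeholders for the two additional criteria in the Proposed Rule, whose substance is not modeled here.

```python
from dataclasses import dataclass


@dataclass
class Entity:
    # All field names are hypothetical placeholders for illustration.
    in_critical_sector: bool   # is the entity "in" one of the 16 PPD-21 sectors?
    meets_criterion_a: bool    # stand-in for the first additional proposed criterion
    meets_criterion_b: bool    # stand-in for the second additional proposed criterion


def is_covered_entity(entity: Entity) -> bool:
    """Sector membership is required, plus at least one additional criterion."""
    return entity.in_critical_sector and (
        entity.meets_criterion_a or entity.meets_criterion_b
    )


def is_reportable(entity: Entity, is_covered_cyber_incident: bool) -> bool:
    """Coverage attaches to the whole entity, so the affected business unit
    is deliberately not an input to this check."""
    return is_covered_entity(entity) and is_covered_cyber_incident


# Example: in a sector and meets only one of the two criteria.
example = Entity(in_critical_sector=True, meets_criterion_a=False, meets_criterion_b=True)
covered = is_covered_entity(example)
```

Because the test is applied at the entity level, the sketch takes no argument identifying which part of the organization was affected.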

Decision tree that demonstrates the overarching elements of the Covered Entity definition. For illustrative purposes only.